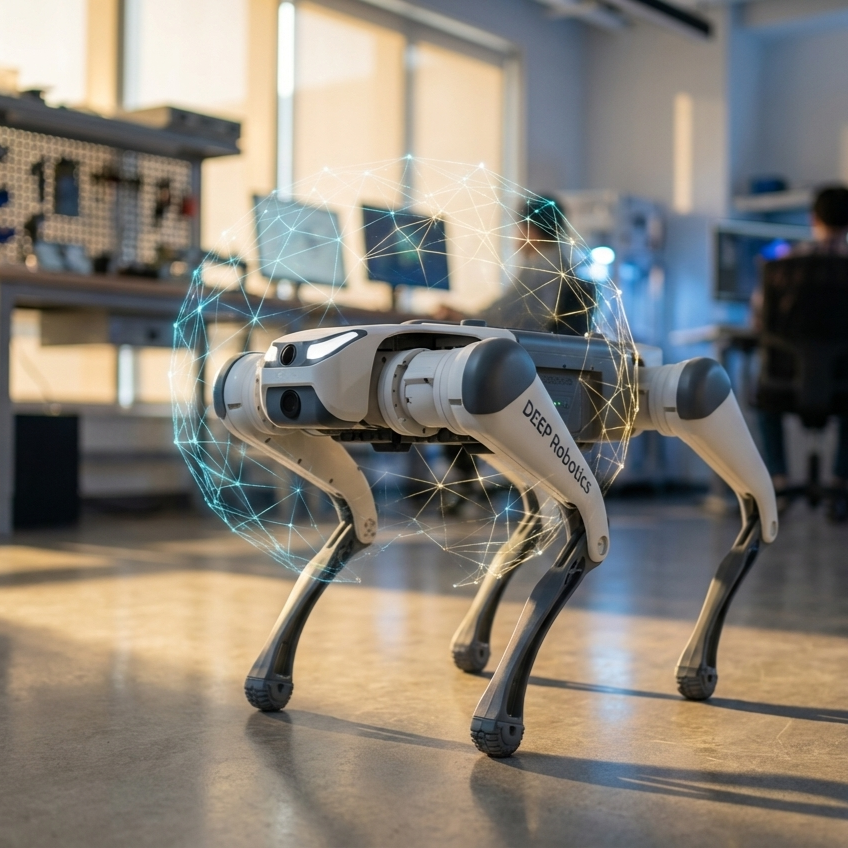

The DEEP Robotics Lite3 is used in research and higher education primarily as an open-architecture development platform for Sim-to-Real Reinforcement Learning (RL), autonomous navigation (SLAM), and complex locomotion control. Unlike consumer-grade quadrupeds that restrict access to low-level motor drivers, the Lite3 gives researchers direct access to joint torque control through a high-bandwidth open API. University laboratories can therefore bypass pre-programmed gaits and test custom algorithms for dynamic maneuvering, which makes the platform well suited to Computer Science and Mechanical Engineering curricula focused on next-generation robotics.

Executive Summary

For university robotics labs, the “Sim-to-Real” gap—the difficulty of transferring policies trained in simulation to physical hardware—is a primary bottleneck. This case study analyzes how the DEEP Robotics Lite3 solves this challenge through its industrial-grade control bandwidth and robust mechanical design.

By implementing the Lite3, research teams have achieved:

- Direct Torque Control: Access to 1kHz control frequencies via UDP, enabling the deployment of raw RL policies.

- Enhanced Payload Integration: Utilizing the 7.5kg capacity to mount LiDARs (Livox/Leishen) and edge computing units (NVIDIA Jetson Xavier NX) without compromising agility.

- Seamless ROS 2 Migration: Native support for ROS 2 Foxy/Humble, allowing for rapid integration of modern SLAM and navigation stacks.

The Challenge: The “Black Box” Problem in Robotics Education

In the context of modern robotics education, professors and Ph.D. candidates often face a hardware dilemma. Low-cost robot dogs are typically “black boxes”—they perform impressive tricks, but their internal control loops are locked, preventing students from modifying the underlying locomotion controllers. Conversely, industrial quadrupeds (like the Boston Dynamics Spot) often carry a price tag exceeding $70,000, making them too risky for experimental student projects where crashes are inevitable.

To effectively teach Whole Body Control (WBC) and Model Predictive Control (MPC), an educational platform requires three specific attributes:

- Transparency: Full access to the joint motors and sensor data (IMU, joint encoders) with minimal latency.

- Durability: High-torque motors capable of recovering from falls and navigating unstructured terrain.

- Expandability: Sufficient power and mounting space to carry external perception sensors for mapping projects.

The Solution: DEEP Robotics Lite3 Architecture

The Lite3 was selected for this case study because of its proprietary high-torque joint drive modules and open software stack. The robot offers 50% higher torque density than its predecessor, enabling dynamic maneuvers such as front flips and high jumps, which are useful for stress-testing locomotion algorithms.

Technical Specifications for Research

| Feature | Lite3 Specification | Research Benefit |
|---|---|---|
| Payload Capacity | 7.5kg (approx. 60% of body weight) | Supports heavy LiDAR arrays, depth cameras, and extra battery packs for long-duration SLAM testing. |
| Control Interface | UDP / High-Frequency API (1kHz) | Allows “Motion Host” access for sending raw torque commands, essential for MPC and RL. |
| Compute | NVIDIA Jetson Xavier NX | Provides 21 TOPS of AI performance for running YOLOv8 and TensorRT directly on the robot. |
| Perception | Intel RealSense D435i + LiDAR | Industrial standard sensors ensure data compatibility with open-source libraries like PointCloud Library (PCL). |

Application 1: Sim-to-Real Reinforcement Learning

One of the most advanced applications of the Lite3 in university research is the development of neural network policies for locomotion. In this workflow, students train a “brain” in a physics simulator—such as NVIDIA Isaac Gym or Gazebo—and transfer it to the physical robot.

The Lite3 facilitates this via its Motion Host Communication Interface. According to the technical documentation, the robot accepts complex UDP commands directly to its low-level controller. Researchers can switch the robot into “Torque-control State” (State Value: 6) via the API.
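As a rough illustration of what a state-switch command could look like on the wire, the sketch below packs a small header and provides a helper to send it as a UDP datagram. Only the state value 6 comes from the text above. The three-field layout (code, value, type as little-endian 32-bit integers), the command code, the address, and the port are placeholders chosen for illustration and should be replaced with the values in the Motion Host interface documentation.

```python
import socket
import struct

TORQUE_CONTROL_STATE = 6  # state value quoted in the text above

# Everything below is a placeholder for illustration, not a documented value.
HYPOTHETICAL_SET_STATE_CODE = 0                 # not a real Lite3 command code
HYPOTHETICAL_ROBOT_ADDR = ("127.0.0.1", 50000)  # replace with the documented address


def pack_command(code: int, value: int, kind: int = 0) -> bytes:
    """Pack an assumed (code, value, type) header as three little-endian uint32."""
    return struct.pack("<3I", code, value, kind)


def send_command(payload: bytes, addr=HYPOTHETICAL_ROBOT_ADDR) -> int:
    """Send one UDP datagram and return the number of bytes written."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, addr)


# Build (but do not send) a request to enter the torque-control state.
packet = pack_command(HYPOTHETICAL_SET_STATE_CODE, TORQUE_CONTROL_STATE)
```

Keeping packing separate from sending makes the byte layout easy to unit-test on a laptop before anything is transmitted to the robot.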

The Workflow:

- Simulation: A policy is trained using PPO (Proximal Policy Optimization) to walk over rough terrain.

- Bridge: The policy outputs joint position and velocity targets.

- Execution: The Lite3 SDK receives these targets via Ethernet/Wi-Fi and applies the necessary torque to each of the robot's 12 joints (12 degrees of freedom, DOF).
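One common way to turn the position and velocity targets from the Bridge step into joint torques is a per-joint PD law, tau = kp * (q_des - q) + kd * (qd_des - qd). The sketch below shows that law with a torque clip. It is an assumption about how the conversion could be done, not a description of the Lite3 SDK internals; the gains, the torque limit, and the `JointState` type are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence

NUM_JOINTS = 12  # 12 DOF, as stated in the workflow above


@dataclass
class JointState:
    angle: float      # measured joint position, rad
    velocity: float   # measured joint angular velocity, rad/s


def pd_torques(
    target_angles: Sequence[float],
    target_velocities: Sequence[float],
    states: Sequence[JointState],
    kp: float,
    kd: float,
    torque_limit: float,
) -> List[float]:
    """tau = kp * (q_des - q) + kd * (qd_des - qd), clipped to +/- torque_limit."""
    if not (len(target_angles) == len(target_velocities) == len(states)):
        raise ValueError("targets and states must have the same length")
    torques = []
    for q_des, qd_des, s in zip(target_angles, target_velocities, states):
        tau = kp * (q_des - s.angle) + kd * (qd_des - s.velocity)
        torques.append(max(-torque_limit, min(torque_limit, tau)))
    return torques


# Illustrative values only: the gains and limit are not Lite3 parameters.
states = [JointState(angle=0.3, velocity=1.0)] * NUM_JOINTS
torques = pd_torques([0.5] * NUM_JOINTS, [0.0] * NUM_JOINTS, states,
                     kp=20.0, kd=0.5, torque_limit=10.0)
```

The clip matters during early hardware trials: an untuned policy can request large position jumps, and saturating the command keeps the resulting torque bounded.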

Because the Lite3 exposes `RobotJointVel` (joint angular velocity) and `RobotJointAngle` (joint position) at 100Hz-1kHz, the policy can be fed the same kind of joint-state observations on hardware that it was trained on in simulation. This narrows the discrepancy between simulation and reality and improves the odds of deploying autonomous walking behaviors successfully.
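In practice, a learned policy often runs at a lower rate than the low-level loop, with the most recent action held between policy updates. The sketch below illustrates that decimation pattern. The 50 Hz policy rate is an assumption made for the example, and `policy`, `read_state`, and `send_targets` are hypothetical callables standing in for the trained network and the SDK calls. Real-time scheduling (sleeping until the next tick) is deliberately left out.

```python
from typing import Any, Callable


def decimation(control_hz: int, policy_hz: int) -> int:
    """Number of control ticks per policy update."""
    if policy_hz <= 0 or control_hz % policy_hz != 0:
        raise ValueError("control_hz must be a positive multiple of policy_hz")
    return control_hz // policy_hz


def run_ticks(
    n_ticks: int,
    control_hz: int,
    policy_hz: int,
    policy: Callable[[Any], Any],
    read_state: Callable[[], Any],
    send_targets: Callable[[Any, Any], None],
) -> int:
    """Run n_ticks control steps; return how many times the policy was evaluated."""
    steps = decimation(control_hz, policy_hz)
    action = None
    policy_calls = 0
    for tick in range(n_ticks):
        state = read_state()          # fresh joint state every control tick
        if tick % steps == 0:         # refresh the action at the policy rate
            action = policy(state)
            policy_calls += 1
        send_targets(action, state)   # held action is re-sent between updates
    return policy_calls


# 100 ticks of a 1 kHz loop with an assumed 50 Hz policy.
calls = run_ticks(100, 1000, 50,
                  policy=lambda s: s,
                  read_state=lambda: 0.0,
                  send_targets=lambda a, s: None)
```

Matching the decimation factor used in the simulator is one of the simpler ways to keep training and deployment timing consistent.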

Application 2: Teaching ROS 2 and Navigation

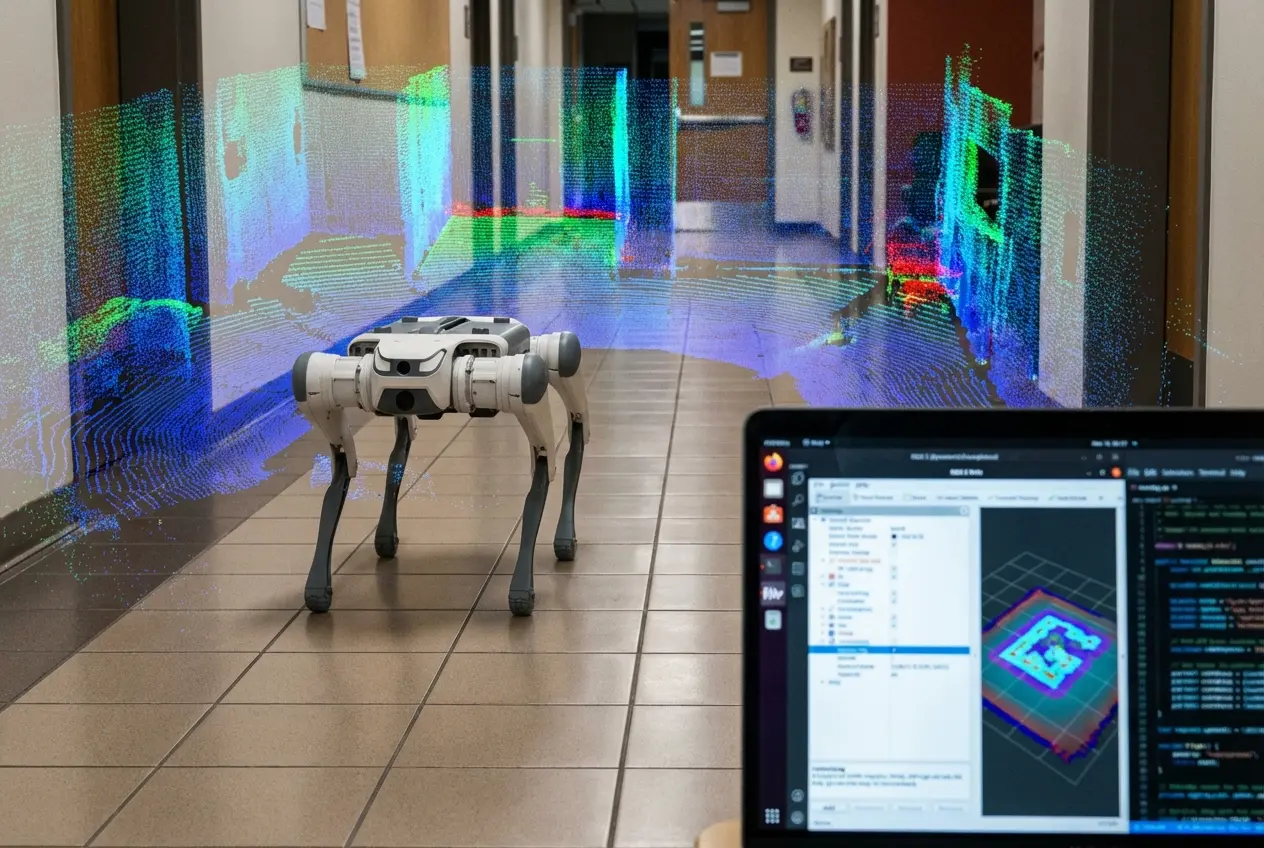

For computer science curricula, the Lite3 serves as a mobile node in a ROS 2 (Robot Operating System) network. The system supports ROS 2 Foxy (the summary above also lists Humble), and ROS 2 is a de facto standard for modern robotics development.

Using the official `lite_cog_ros2` packages, students can implement LiDAR-based SLAM (Simultaneous Localization and Mapping). The robot comes pre-configured to run algorithms like Faster-LIO for 3D mapping and Nav2 for path planning. The high payload allows the integration of a Livox or Leishen LiDAR, which streams point cloud data to the onboard Jetson Xavier NX.

Real-World Lab Scenarios:

- Mapping: Students drive the robot manually to build a `.pcd` (Point Cloud Data) map of the campus.

- Localization: Using `hdl_localization`, the robot fuses IMU and LiDAR data to understand its position within the map, even during rapid movements where visual odometry might fail.

- People Tracking: Utilizing the onboard camera and DeepStream, students program the robot to identify and follow specific individuals using YOLOv8 inference, a task requiring significant onboard compute power.
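The people-tracking scenario above ultimately reduces to mapping a detection to a velocity command. The sketch below shows one simple proportional approach: steer to center the bounding box and move forward until the box reaches a target height. It is not taken from the Lite3 or DeepStream software. The `BoundingBox` type, gains, and limits are hypothetical, and the sign convention (positive yaw turns left) follows common ROS practice.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class BoundingBox:
    x_min: float  # pixels
    y_min: float
    x_max: float
    y_max: float


def clamp(value: float, limit: float) -> float:
    """Limit value to the range [-limit, +limit]."""
    return max(-limit, min(limit, value))


def follow_command(
    box: BoundingBox,
    image_width: int,
    target_height_px: float,
    k_yaw: float = 1.5,        # rad/s per unit of normalized offset (illustrative)
    k_forward: float = 0.004,  # m/s per pixel of height error (illustrative)
    max_forward: float = 0.8,  # m/s
    max_yaw: float = 1.0,      # rad/s
) -> Tuple[float, float]:
    """Return (forward velocity, yaw rate) that centers and approaches the box."""
    half_width = image_width / 2.0
    center_x = 0.5 * (box.x_min + box.x_max)
    # Normalized offset in [-1, 1]; positive means the person is right of center.
    offset = (center_x - half_width) / half_width
    yaw_rate = clamp(-k_yaw * offset, max_yaw)  # person on the right -> turn right
    # A box shorter than the target means the person is far away: move forward.
    height = box.y_max - box.y_min
    forward = clamp(k_forward * (target_height_px - height), max_forward)
    return forward, yaw_rate


# A person detected in the right part of a 640-pixel-wide frame.
forward, yaw_rate = follow_command(BoundingBox(480, 100, 640, 200),
                                   image_width=640, target_height_px=200.0)
```

In a ROS 2 setup, the two returned values would typically populate the linear and angular fields of a velocity message; that wiring is omitted here to keep the example runnable without a ROS installation.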

The Results: Quantitative Outcomes

By deploying the DEEP Robotics Lite3, research institutions report improvements in their development cycles and capabilities, summarized in the metrics below.

Key Performance Metrics Achieved

- ✅ 50% Torque Increase: The upgraded joint modules allowed for successful recovery from falls during RL training, reducing the need for manual resets by students.

- ✅ 90-Minute Runtime: The endurance enables long-duration data gathering sessions for SLAM, covering up to 5km on a single charge.

- ✅ <40ms Latency: The DeepStream video pipeline enables near real-time object tracking and obstacle avoidance.

- ✅ 7.5kg Effective Payload: Successfully carried a complete sensor suite including a 3D LiDAR, auxiliary battery, and external 5G communication module.
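To put the runtime and latency figures in context, the short calculation below derives the average speed implied by covering 5km in 90 minutes and the distance the robot would travel during a 40ms perception delay at that speed. Both inputs are the "up to" and upper-bound figures quoted above, and combining them this way is an assumption, so the results are rough indications rather than measured values.

```python
def average_speed_mps(distance_km: float, runtime_min: float) -> float:
    """Average speed in m/s for a given distance and duration."""
    return distance_km * 1000.0 / (runtime_min * 60.0)


def distance_during_latency_m(speed_mps: float, latency_ms: float) -> float:
    """Distance travelled while waiting for one pipeline latency period."""
    return speed_mps * latency_ms / 1000.0


speed = average_speed_mps(5.0, 90.0)            # about 0.93 m/s
drift = distance_during_latency_m(speed, 40.0)  # about 0.037 m
print(f"average speed: {speed:.2f} m/s, travel during 40 ms: {drift * 100:.1f} cm")
```

At walking pace, a 40ms delay corresponds to a few centimeters of travel, which is why the latency figure is described as near real-time for tracking and obstacle avoidance.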

Conclusion

The DEEP Robotics Lite3 has effectively positioned itself not merely as a robot dog, but as a critical infrastructure tool for robotics education and research. By offering a high payload capacity, robust joint torque, and a transparent software architecture compatible with ROS 2 and Isaac Gym, it bridges the gap between theoretical simulation and real-world application.

For universities looking to modernize their robotics curriculum or research labs aiming to publish papers on legged locomotion, the Lite3 offers the necessary balance of durability, openness, and performance.