Key Takeaways for Educational Buyers:

- The “Goldilocks” Solution: Lite3 fills the gap between hobbyist units (like Unitree Go2) and industrial units (Boston Dynamics Spot).

- Open Architecture: Features a “Split-Brain” design separating high-level AI (NVIDIA Jetson) from low-level motion control.

- Research Ready: Native support for ROS 2 Humble, Reinforcement Learning (RL) pipelines, and Sim-to-Real workflows.

- Lab-Friendly Specs: 7.5 kg payload capacity allows for custom LiDAR or robotic arm integration, with swappable batteries for continuous lab sessions.

For years, university procurement departments faced a binary choice in legged robotics: purchase fragile, “black-box” consumer toys that break under student experimentation, or spend upwards of $75,000 on a single industrial unit like Spot. Neither option served the dual mandate of teaching fundamentals and conducting novel research.

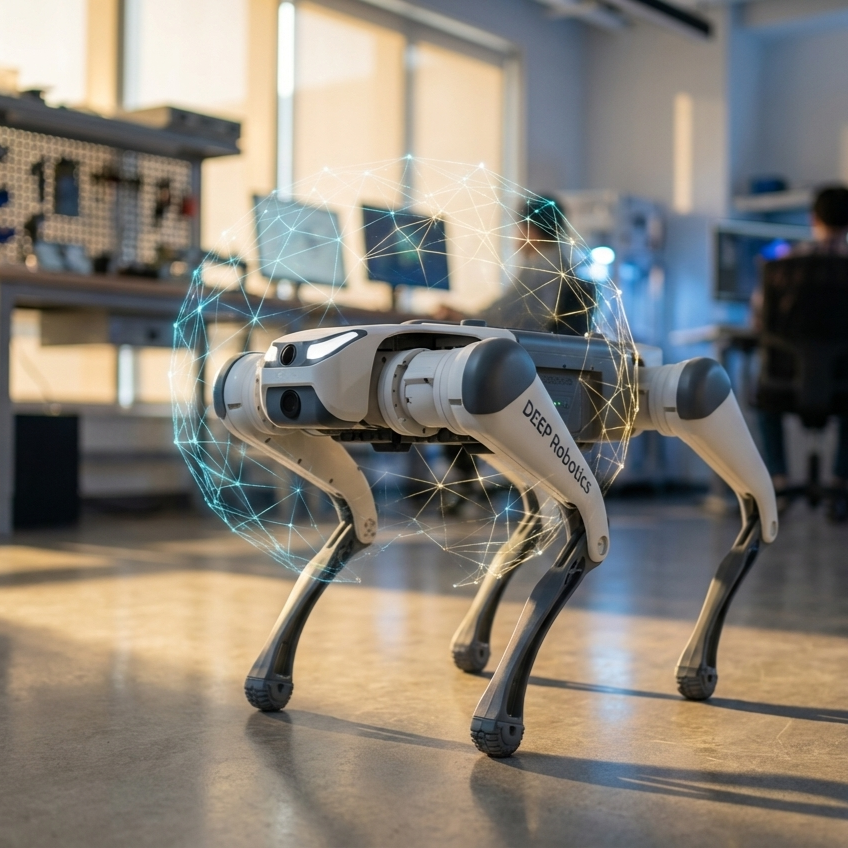

The DEEP Robotics Lite3 has emerged as the standard “Goldilocks” solution for higher education. By combining an industrial-grade metal composite chassis with a fully open software stack, it provides the durability required for undergraduate classrooms and the torque control transparency needed for PhD-level Reinforcement Learning (RL) research.

What is the DEEP Robotics Lite3?

The Lite3 is a mid-sized quadruped robot featuring a modular “split-brain” architecture. It combines real-time torque control (RK3588 Motion Host) with high-level AI perception (NVIDIA Jetson Xavier/Orin), making it the primary platform for Physical AI and Sim-to-Real research in universities.

1. The “Split-Brain” Architecture: Safe for Student Code

One of the primary fears in a robotics lab is a student deploying code that damages the hardware. The Lite3 addresses this through a distinct hardware separation that protects the robot’s core stability while allowing full experimental freedom.

The Motion Host (Real-Time Safety)

At the lowest level, the robot runs on an RK3588 Motion Host. According to the Lite3 Motion Host Communication Interface specifications, this unit manages the high-frequency (1 kHz) control loops. It handles:

- Joint Torque Control: Direct access to motor drivers via UDP.

- Safety Limits: Hard-coded thermal and position limits that override user commands if they threaten the hardware.

- State Estimation: Processing IMU and encoder data to maintain balance.
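The motion host's actual limit values and override logic live in its firmware and are not described here. As a rough illustration only, the Python sketch below shows how a filter of this kind could sit between a user's torque command and the motor driver. The `JointLimits` fields, the numeric limits, and the `filter_torque` helper are all hypothetical, and the 1 kHz loop that would call it is omitted.

```python
from dataclasses import dataclass


@dataclass
class JointLimits:
    """Hypothetical per-joint limits; real values belong to the motion host firmware."""
    q_min: float     # lower position limit, rad
    q_max: float     # upper position limit, rad
    tau_max: float   # absolute torque cap, N·m
    temp_max: float  # motor temperature at which torque is cut, °C


def filter_torque(tau_cmd: float, q: float, temp: float, lim: JointLimits) -> float:
    """Return the torque actually forwarded to the driver.

    User commands pass through unless the motor is too hot or the command
    would push the joint further past one of its position limits.
    """
    # Thermal limit: cut torque entirely when the motor is overheating.
    if temp >= lim.temp_max:
        return 0.0
    # Position limits: refuse torque that drives the joint further outward.
    if q <= lim.q_min and tau_cmd < 0.0:
        return 0.0
    if q >= lim.q_max and tau_cmd > 0.0:
        return 0.0
    # Otherwise saturate the command to the torque cap.
    return max(-lim.tau_max, min(lim.tau_max, tau_cmd))
```

For example, with limits of ±1 rad, 20 N·m, and 80 °C, a 35 N·m command at 0.2 rad is saturated to 20 N·m, a command that pulls a joint back from beyond its limit still passes, and any command issued above 80 °C is zeroed.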

The Perception Host (AI & Navigation)

The “Brain” of the robot is an NVIDIA Jetson Xavier NX (or Orin in upgraded models). This runs a standard Ubuntu environment (20.04/22.04), giving students root access. Here, researchers can deploy:

- Visual SLAM: Using the onboard Intel RealSense D435i.

- LiDAR Mapping: Drivers for Livox or YDLidar integration.

- Neural Networks: Running PyTorch or TensorFlow inference for object detection (YOLOv8).

2. Native ROS 2 Support and Sim-to-Real Workflows

Modern robotics curricula are shifting rapidly to ROS 2 (Robot Operating System 2). Unlike competitors that require complex “bridge” software, the Lite3 supports ROS 2 natively.

| Feature | Lite3 Implementation | Research Benefit |

|---|---|---|

| Middleware | ROS 1 (Noetic) & ROS 2 (Foxy/Humble) | Supports legacy codebases and modern curriculum. |

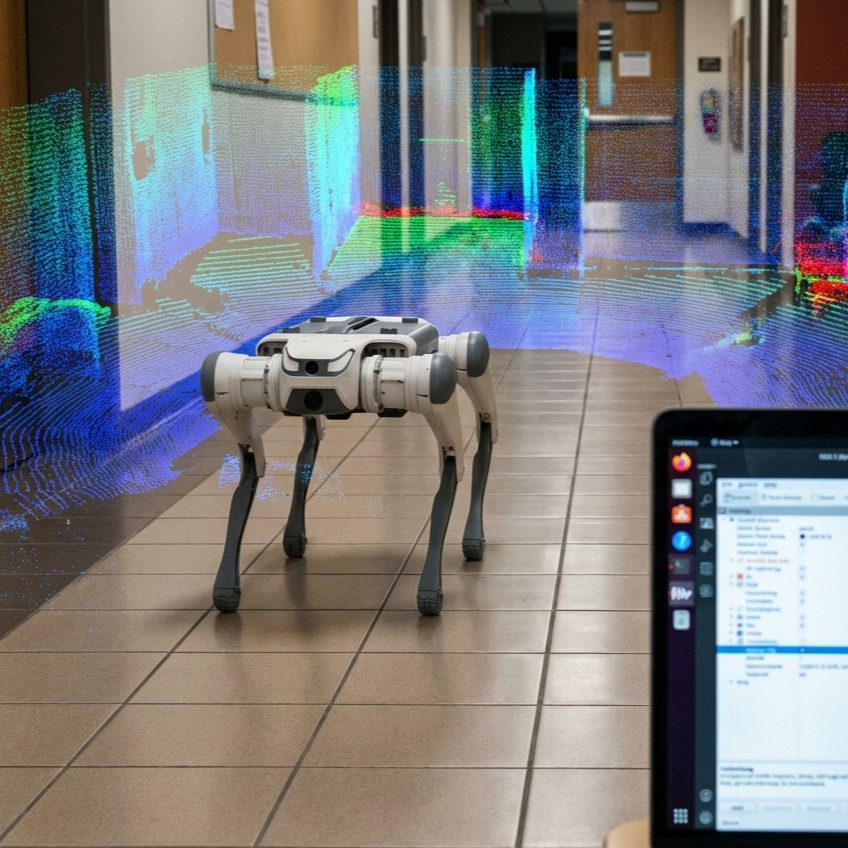

| SLAM | Faster-LIO & hdl_graph_slam | Industry-standard mapping algorithms pre-configured. |

| Navigation | Nav2 Stack | Allows for autonomous path planning teaching. |

The Reinforcement Learning (RL) Pipeline

The Lite3 is widely cited as the “Hello World” platform for Embodied AI. A typical Master’s or PhD workflow involves:

- Training: Students train a walking policy in NVIDIA Isaac Gym or Isaac Lab using PPO (Proximal Policy Optimization).

- Domain Randomization: Varying friction and mass in simulation to make the policy robust.

- Deployment: Transferring the policy directly to the Lite3’s Jetson unit via the open Python API.

- Zero-Shot Transfer: Researchers have successfully demonstrated that policies trained on flat ground in sim can traverse stairs on the real Lite3 without fine-tuning, thanks to its accurate torque control.
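Isaac Gym and Isaac Lab configure domain randomization through their own settings, so the standalone sketch below is not their API. It only illustrates the idea from the list above: draw fresh friction and mass values at the start of every training episode. The parameter ranges and the `sample_episode_params` helper are hypothetical placeholders.

```python
import random
from dataclasses import dataclass


@dataclass
class EpisodeParams:
    friction: float       # ground friction coefficient
    added_mass_kg: float  # extra mass attached to the trunk (may be negative)


# Hypothetical ranges for illustration; tune them for your own task.
FRICTION_RANGE = (0.4, 1.25)
ADDED_MASS_RANGE = (-1.0, 3.0)


def sample_episode_params(rng: random.Random) -> EpisodeParams:
    """Draw new physical parameters uniformly at the start of an episode."""
    return EpisodeParams(
        friction=rng.uniform(*FRICTION_RANGE),
        added_mass_kg=rng.uniform(*ADDED_MASS_RANGE),
    )


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so runs are reproducible
    for episode in range(3):
        p = sample_episode_params(rng)
        print(f"episode {episode}: friction={p.friction:.2f}, "
              f"added mass={p.added_mass_kg:+.2f} kg")
```

Because the policy never sees the same physics twice, it cannot overfit to one friction or mass value, which is what makes it more tolerant of the real robot's unmodeled differences.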

3. Hardware Specs: Why Payload Matters

In a research setting, a robot is rarely used “naked.” It carries sensors, additional compute units, or robotic manipulators. The Lite3’s industrial design offers significant advantages here.

- 7.5 kg Effective Payload: Unlike entry-level units that struggle with more than 3 kg, the Lite3 can carry a 3D LiDAR puck (like a Velodyne or Ouster), an additional battery, and a custom robotic arm simultaneously.

- Peak Torque ~50 N·m: High torque density allows for dynamic maneuvers. The robot can perform backflips and recover from falls, capabilities that are essential for testing robust locomotion algorithms.

- Swappable Battery System: The 126.72 Wh battery offers 1.5–2 hours of runtime. Crucially, it is external and swappable, so a lab with three batteries can keep the spares charging while one is in use and run experiments continuously all day.
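The battery figures above can be turned into a quick planning check. The sketch derives the average power draw implied by a 126.72 Wh pack lasting 1.5 to 2 hours, and tests whether a given number of packs can keep the robot running if spares recharge while one is in use. The charge time is not given in the text, so any value passed in is an assumption, as is the premise that each spare pack has its own charger slot.

```python
# Capacity and runtime come from the spec above; charge times are hypothetical inputs.
BATTERY_WH = 126.72           # pack capacity, Wh
RUNTIME_RANGE_H = (1.5, 2.0)  # quoted runtime per pack, hours


def average_draw_w(capacity_wh: float, runtime_h: float) -> float:
    """Average power draw implied by a pack capacity and its runtime."""
    return capacity_wh / runtime_h


def rotation_is_continuous(n_batteries: int, runtime_h: float, charge_time_h: float) -> bool:
    """True if swapping packs can keep the robot running without a pause.

    Assumes every spare pack charges in parallel (one charger slot each).
    A pack removed from the robot is needed again only after the other
    n - 1 packs have each been used for runtime_h hours.
    """
    if n_batteries < 2:
        return False
    return charge_time_h <= (n_batteries - 1) * runtime_h


if __name__ == "__main__":
    for runtime in RUNTIME_RANGE_H:
        print(f"{runtime} h runtime -> about {average_draw_w(BATTERY_WH, runtime):.1f} W average draw")
    # Hypothetical 2.5 h charge time, three packs, worst-case 1.5 h runtime.
    print(rotation_is_continuous(3, 1.5, 2.5))  # True: 2.5 h <= 2 * 1.5 h
```

Even without any recharging, three packs give 4.5 to 6 hours of total runtime at the quoted range; running continuously beyond that depends on the real charge time of the packs.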

4. Step-by-Step: Initializing a Lite3 for Research

For lab managers integrating the Lite3, the setup process is designed to be straightforward.

Step 1: Network Configuration

The robot broadcasts its own WiFi hotspot. According to the Perception Development Manual, you connect your development PC to this network and use NoMachine or SSH to access the robot's perception host (IP: 192.168.1.103).
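Before opening NoMachine or SSH, it can help to confirm that the development PC actually reaches the robot. This minimal Python sketch tries a TCP connection to 192.168.1.103, assuming SSH listens on its default port 22; the helper name `is_reachable` is hypothetical and not part of any Lite3 tooling.

```python
import socket

PERCEPTION_HOST = "192.168.1.103"  # address from the Perception Development Manual
SSH_PORT = 22                      # assumption: SSH on its default port


def is_reachable(host: str, port: int, timeout_s: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # covers refused connections, timeouts, and unreachable networks
        return False


if __name__ == "__main__":
    if is_reachable(PERCEPTION_HOST, SSH_PORT):
        print("SSH port reachable")
    else:
        print("Not reachable: check that the PC joined the robot's WiFi hotspot")
```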

Step 2: Checking ROS Versions

The Lite3 comes pre-installed with scripts to switch environments. You can toggle between ROS 1 and ROS 2 using the built-in script:

```bash
sudo ./switch_ros_version.sh ros2
```

Step 3: Launching LiDAR Mapping

To start a SLAM session, run the `start_slam.sh` script. It launches the LiDAR driver (Livox or Leishen), the `faster-lio` mapping node, and RViz for real-time visualization.

Frequently Asked Questions (FAQ)

Can I use custom sensors with the Lite3?

Yes. The Lite3 features a hardware expansion port offering USB 3.0, HDMI, and Ethernet. More importantly, it provides external power outputs at 5V, 12V, and 24V. This allows universities to mount expensive LiDAR units, gas sensors, or additional NVIDIA Jetson Orin modules directly to the robot without needing separate battery packs.
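When planning which peripherals to run from the 5 V, 12 V, and 24 V outputs, a simple per-rail power budget catches overloads before anything is wired. The current limits in this sketch are hypothetical placeholders rather than published Lite3 values, and the device wattages in the example are illustrative; substitute figures from the hardware manual and your sensors' datasheets.

```python
# Rail voltages come from the text; the current limits (A) are hypothetical placeholders.
RAIL_LIMITS_A = {5: 3.0, 12: 5.0, 24: 4.0}


def rail_loads_w(devices):
    """Sum power per rail. devices: iterable of (name, rail_volts, watts)."""
    loads = {volts: 0.0 for volts in RAIL_LIMITS_A}
    for name, rail_volts, watts in devices:
        if rail_volts not in loads:
            raise ValueError(f"{name}: no {rail_volts} V output available")
        loads[rail_volts] += watts
    return loads


def over_budget(devices):
    """Return the rails whose total load exceeds volts * current limit."""
    loads = rail_loads_w(devices)
    return [volts for volts, watts in loads.items() if watts > volts * RAIL_LIMITS_A[volts]]


if __name__ == "__main__":
    # Hypothetical payload: wattages are illustrative only.
    payload = [("3D LiDAR", 12, 12.0), ("gas sensor", 5, 1.0), ("Jetson Orin module", 24, 60.0)]
    print(rail_loads_w(payload))
    print(over_budget(payload))  # [] with the placeholder limits
```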

Is the robot safe for students to program low-level gait algorithms?

Yes. The Lite3 uses a “soft emergency stop” protocol. If the robot detects a roll or pitch angle beyond its safety limits (for example, because of faulty student code), it enters the Lose Control Protection State (State Value 8) and cuts motor power to prevent hardware damage. This encourages experimentation by lowering the risk of catastrophic failure.
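The tilt check itself runs on the motion host, and its exact thresholds are not given here. The sketch below only illustrates the logic: convert the IMU orientation quaternion to roll and pitch (ZYX Euler convention), and switch to state 8 when either angle exceeds a limit. The 60° threshold, the `next_state` helper, and the (w, x, y, z) quaternion ordering are assumptions for illustration.

```python
import math

LOSE_CONTROL_STATE = 8               # state value from the text
TILT_LIMIT_RAD = math.radians(60.0)  # hypothetical threshold


def roll_pitch(w: float, x: float, y: float, z: float) -> tuple:
    """Roll and pitch (rad) from a unit quaternion, ZYX convention."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp before asin so rounding error cannot push the argument outside [-1, 1].
    s = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(s)
    return roll, pitch


def next_state(current_state: int, quat: tuple) -> int:
    """Switch to the protection state if roll or pitch exceeds the limit."""
    roll, pitch = roll_pitch(*quat)
    if abs(roll) > TILT_LIMIT_RAD or abs(pitch) > TILT_LIMIT_RAD:
        return LOSE_CONTROL_STATE
    return current_state
```

A robot rolled 90° onto its side, quaternion (√0.5, √0.5, 0, 0), trips the check, while a 30° pitch leaves the current state unchanged.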

Does it support Reinforcement Learning out of the box?

The Lite3 is “RL-Ready.” It provides the low-level API access (Torque Control Mode) necessary for RL policies. The manufacturer and community provide open-source repositories (such as `Lite3_rl_deploy`) that bridge the gap between simulation environments like Isaac Gym and the real robot hardware.
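The repositories named above are the place to look for the actual deployment code. As a hedged illustration of one common pattern in legged-robot RL deployment (not necessarily what `Lite3_rl_deploy` does), a policy often outputs joint-position offsets that a PD law converts into torques for the torque control mode. The function names, gains, and action scaling below are hypothetical.

```python
from typing import Callable, List, Sequence


def pd_torques(q_des: Sequence[float], q: Sequence[float], dq: Sequence[float],
               kp: float, kd: float) -> List[float]:
    """Joint-space PD law: tau = kp * (q_des - q) - kd * dq, per joint."""
    if not (len(q_des) == len(q) == len(dq)):
        raise ValueError("joint vectors must have the same length")
    return [kp * (qd - qi) - kd * dqi for qd, qi, dqi in zip(q_des, q, dq)]


def policy_step(policy: Callable[[Sequence[float]], Sequence[float]],
                obs: Sequence[float], default_q: Sequence[float], action_scale: float,
                q: Sequence[float], dq: Sequence[float], kp: float, kd: float) -> List[float]:
    """One control tick: observation -> action -> joint targets -> torques."""
    action = policy(obs)
    # The action is read as a scaled offset from a nominal standing pose.
    q_des = [q0 + action_scale * a for q0, a in zip(default_q, action)]
    return pd_torques(q_des, q, dq, kp, kd)
```

One reason for this split is that the policy can run more slowly than the torque loop, with the PD law re-evaluated at the faster rate against the latest joint readings.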

Conclusion: The Smart Choice for Higher Ed

For universities, the goal is to provide students with relevant, industry-transferable skills while operating within a finite budget. The DEEP Robotics Lite3 succeeds by offering the mechanical robustness of an industrial tool with the open-source flexibility of a development kit. By standardizing on Lite3, labs can effectively teach everything from basic kinematics to advanced Reinforcement Learning, making it the ultimate quadruped research platform for the modern curriculum.